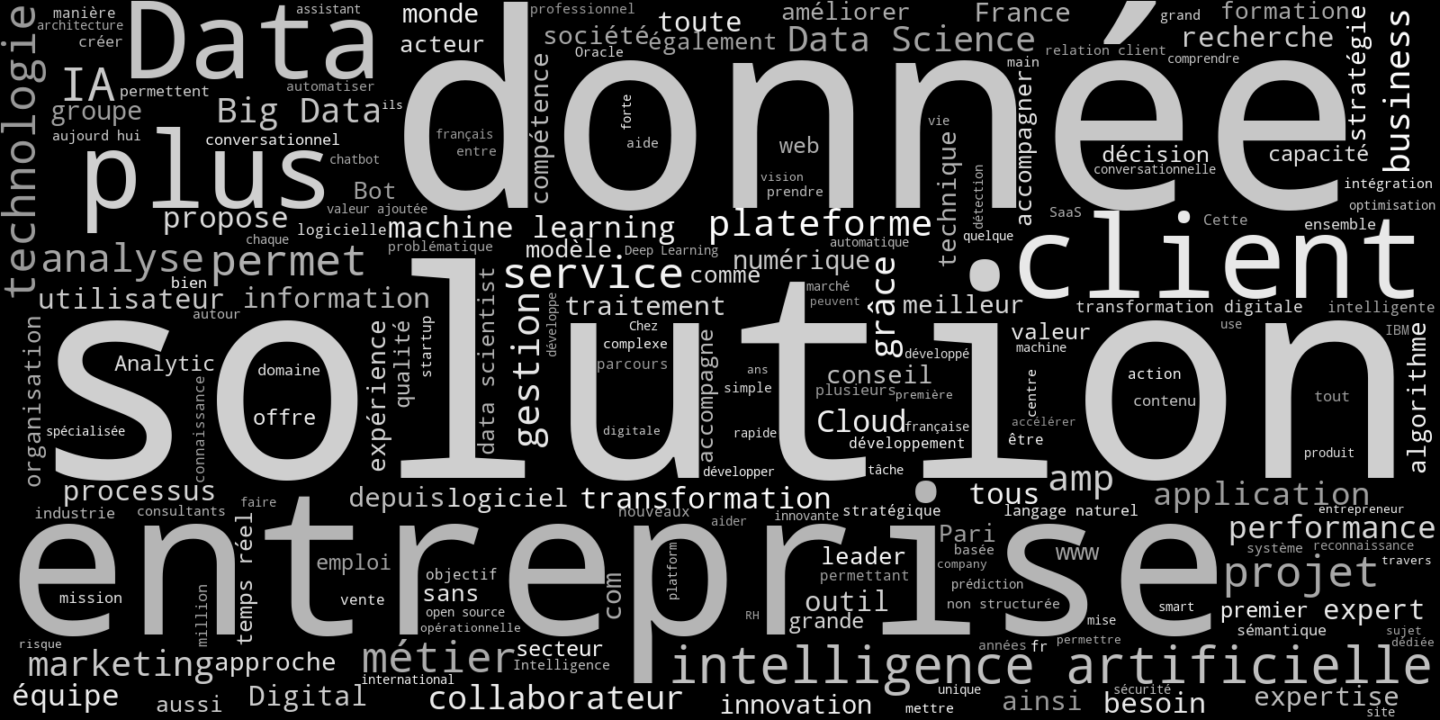

AI Paris 2019 in one picture

Posted on Mon 17 June 2019 in Meeting • 3 min read

Last week, I was at the AI Paris 2019 event to represent Kernix. We had great talks with so many people that I barely had time to walk around and see what other companies were working on. That is why I am looking into it afterwards. Can we get the big picture of the event without being there?

As it happens, the list of companies is on the AI Paris 2019 website, and a little bit of Python code is enough to retrieve it. The following code retrieves the HTML content of the page we are interested in:

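    import urllib.request  # "import urllib" alone does not load the request submodule

    url = "https://aiparis.fr/2019/sponsors-et-exposants/"

    # Download the page and decode the raw bytes into a string
    with urllib.request.urlopen(url) as response:
        html = response.read().decode('utf-8')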
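
The 'utf-8' in the snippet above is hard-coded. As a hedged alternative, the charset can often be read from the Content-Type response header: response.headers is an email.message.Message, which offers get_content_charset(). The sketch below uses a hand-built header and a hypothetical helper named decode_body, so that it runs without network access:

```python
from email.message import Message


def decode_body(raw, headers, default="utf-8"):
    """Decode raw bytes using the charset declared in the headers, if any."""
    # get_content_charset() returns None when no charset parameter is present.
    charset = headers.get_content_charset() or default
    return raw.decode(charset)


# Hand-built stand-in for response.headers (an assumption for this sketch).
headers = Message()
headers["Content-Type"] = "text/html; charset=ISO-8859-1"

decoded = decode_body("donnée".encode("iso-8859-1"), headers)

# No Content-Type header at all: the default encoding is used.
fallback = decode_body("donnée".encode("utf-8"), Message())
```

With a real response, the call would be decode_body(response.read(), response.headers). A server can still declare the wrong charset, so this is not a guarantee either.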

Note that response.read() returns bytes, so we have to decode it to get a string. The encoding used is UTF-8, which can be seen in the charset attribute of the meta tag (and fortunately it is the correct encoding, which is not always the case). Looking at the HTML, we notice that the data is stored in a JavaScript variable. Let's get the line where it is defined:

    import re

    # Keep the first line of the page that mentions "Kernix"
    line = re.findall(".*Kernix.*", html)[0]
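
A quick way to check what this pattern returns is to run it on a made-up page, with and without the re.DOTALL flag. The content of sample below is an assumption, not the real page:

```python
import re

# Made-up stand-in for the downloaded page (not the real content).
sample = '<script>\nvar exhibitors = [{"name": "Kernix"}];\n</script>'

# Without flags, only the line containing "Kernix" comes back.
single_line = re.findall(".*Kernix.*", sample)

# With re.DOTALL, the match runs across line breaks and covers the whole text.
whole_text = re.findall(".*Kernix.*", sample, flags=re.DOTALL)
```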

By default, "." in a regex does not match a newline, so the match does not span multiple lines. The content of the line variable is almost JSON. We can get the data as a Python dictionary with the following:

    import json

    i = line.index('[')               # start of the array literal
    d = '{"all":' + line[i:-1] + '}'  # drop the last character and wrap the array in an object
    data = json.loads(d)
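
As an illustration of the slicing above, here is the same wrapping applied to a made-up line. The variable name exhibitors and the two entries are assumptions, and the sketch also assumes that the JavaScript assignment ends with a semicolon, which is what the final slice removes:

```python
import json

# Made-up line, assuming the JavaScript assignment ends with a semicolon.
line = ('var exhibitors = [{"name": "Kernix", "description": "<p>Data lab</p>"}, '
        '{"name": "Other", "description": "<b>AI</b> tools"}];')

i = line.index('[')               # position of the first opening bracket
d = '{"all":' + line[i:-1] + '}'  # drop the final ";" and wrap in an object
data = json.loads(d)

names = [e['name'] for e in data['all']]
```

Since the slice is already a JSON array, json.loads(line[i:-1]) alone would return the list directly; the wrapper only gives it a name.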

Let's now get the descriptions of the companies concatenated into a single string:

    import re

    def clean_html(html):
        # Remove anything that looks like an HTML tag
        regex = re.compile('<.*?>')
        text = re.sub(regex, '', html)
        return text

    corpus = [clean_html(e['description']) for e in data['all']]
    text = " ".join(corpus)
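
The variant below is a sketch, not the code used for the picture. It adds html.unescape from the standard library, on the assumption that some descriptions contain entities such as &amp;amp;. The sample string and the name clean_html_text are made up:

```python
import html
import re

TAG_RE = re.compile(r'<.*?>')


def clean_html_text(raw):
    """Strip tags, then turn entities such as &amp; into plain characters."""
    return html.unescape(TAG_RE.sub('', raw))


sample = '<p>Conseil &amp; <strong>donn&eacute;es</strong></p>'
cleaned = clean_html_text(sample)
```

Without the unescape step, fragments such as "amp" or "eacute" could end up counted as words.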

The clean_html function is there because the descriptions contain HTML code, and removing the tags makes them more readable. A common step when dealing with text is to remove "stopwords", i.e. words that do not carry meaningful information:

    from nltk.corpus import stopwords

    # Requires the NLTK stopwords data, e.g. nltk.download('stopwords')
    stop_words = (set(stopwords.words('french'))
                  | {'le', 'leur', 'afin', 'les', 'leurs'}
                  | set(stopwords.words('english')))

The NLTK library provides lists of stopwords. We use both the English and French lists since the content is mostly written in French, but some descriptions are in English. I also added a few French stopwords.
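
To see the effect of the filtering, the sketch below counts words with and without stopwords. To stay runnable without downloading the NLTK data, it uses a tiny hand-written stopword set and a made-up sentence, both of which are assumptions:

```python
import re
from collections import Counter

# Tiny hand-written stand-in for the NLTK lists (assumption for this sketch).
demo_stop_words = {'les', 'des', 'de', 'et', 'the', 'and', 'of', 'pour'}

demo_text = ("Les données et les solutions pour les entreprises "
             "and the data of the company")

# Lowercase, split into words, then drop the stopwords.
words = re.findall(r"\w+", demo_text.lower())
kept = [w for w in words if w not in demo_stop_words]

top_all = Counter(words).most_common(1)  # most frequent word before filtering
counts_kept = Counter(kept)              # frequencies after filtering
```

Before filtering, the most frequent word is an article; after filtering, only content words remain, and these are the words that end up sized by frequency in a word cloud.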

I am not a big fan of word clouds, since only a few words can be spotted quickly, but for this use case they give a pretty good big picture in very few lines.

Here is the code to generate the word cloud:

    import random

    import matplotlib.pyplot as plt
    from wordcloud import WordCloud

    def grey_color_func(word, font_size,
                        position, orientation,
                        random_state=None,
                        **kwargs):
        # Random lightness between 60% and 100%, with no hue or saturation
        return "hsl(0, 0%%, %d%%)" % random.randint(60, 100)

    wordcloud = WordCloud(width=1600, height=800,
                          background_color="black",
                          stopwords=stop_words,
                          random_state=1,
                          color_func=grey_color_func).generate(text)

    fig = plt.figure(figsize=(20, 10))
    plt.imshow(wordcloud, interpolation="bilinear")
    plt.axis("off")
    plt.tight_layout(pad=0)
    fig.savefig('wordcloud.png', dpi=fig.dpi)
    plt.show()

The grey_color_func function produces a greyscale picture. A fixed random state enables reproducibility. Setting the width, the height and the figsize gives a high-quality output. The margins are removed with these two lines:

    plt.axis("off")
    plt.tight_layout(pad=0)
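
One caveat on reproducibility, offered as an assumption about the wordcloud library rather than a verified fact: grey_color_func draws from the global random module, so random_state=1 probably fixes the layout but not the grey levels. A possible variant is sketched below, assuming that WordCloud passes a random.Random instance as random_state to the color function. The name seeded_grey_color_func is hypothetical:

```python
import random


def seeded_grey_color_func(word, font_size, position, orientation,
                           random_state=None, **kwargs):
    """Grey level drawn from the generator passed in, when there is one."""
    # Fall back to a fresh generator if none is given.
    rng = random_state if random_state is not None else random.Random()
    return "hsl(0, 0%%, %d%%)" % rng.randint(60, 100)


# Two generators with the same seed give the same sequence of grey levels.
rng_a, rng_b = random.Random(1), random.Random(1)
colors_a = [seeded_grey_color_func("data", 10, (0, 0), None, random_state=rng_a)
            for _ in range(3)]
colors_b = [seeded_grey_color_func("data", 10, (0, 0), None, random_state=rng_b)
            for _ in range(3)]
```

It would be passed as color_func=seeded_grey_color_func in place of grey_color_func.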

Let's get back to the word cloud. We can immediately spot the words "solution" (it is the same in French and English), "data" (both the French "donnée" and the English "data") and "company" ("entreprise" in French). The expressions "AI" ("IA" in French) and "artificial intelligence" ("intelligence artificielle") only come later, which is quite surprising for an event focusing on artificial intelligence as a whole. In fact, AI is often seen as a solution to use data in a useful way.

Many actors are working on how to store, manage and process data (which is very important), but only a few, from what I saw and heard, focus on creating value for people. I am always delighted to create something useful for people in their day-to-day life, whether it is a recommender system to discover things we never thought existed, a way to automate boring tasks, a way to improve research in the pharmaceutical sector, or many other things. AI is about people!